Over the Fourth of July weekend I had a chance to experiment with the Image Recognition Platform mentioned last week and on the blog. Using a popular off-the-shelf (PaaS) image recognition service, I've begun submitting photos and capturing the often provocative results. The process raises a lot of questions about the future of machine learning, particularly about the biases that may be introduced into such systems.

Showcase: Submitted Photos with the Resulting Tags, Sorted by Confidence (Highest First)

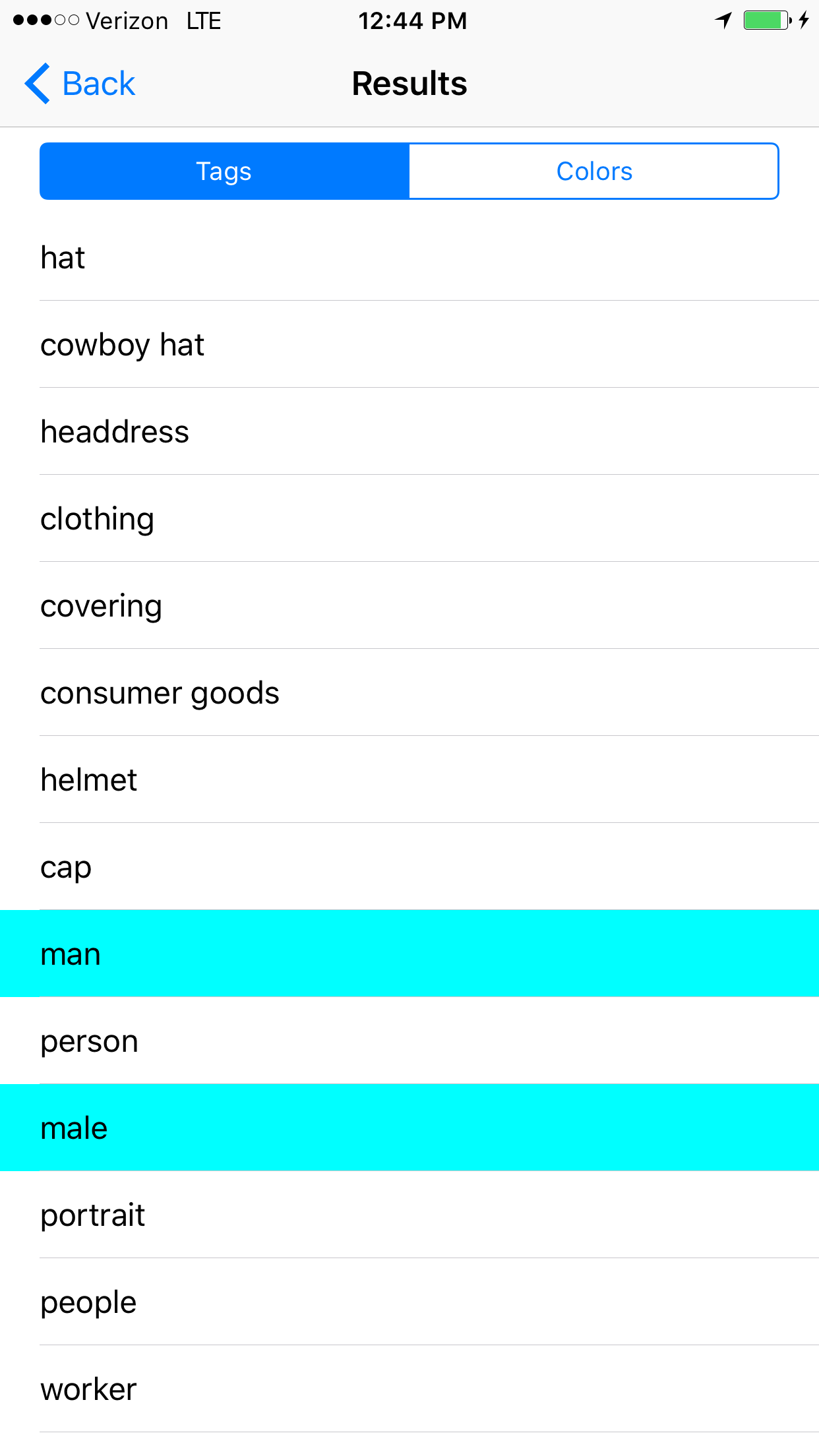

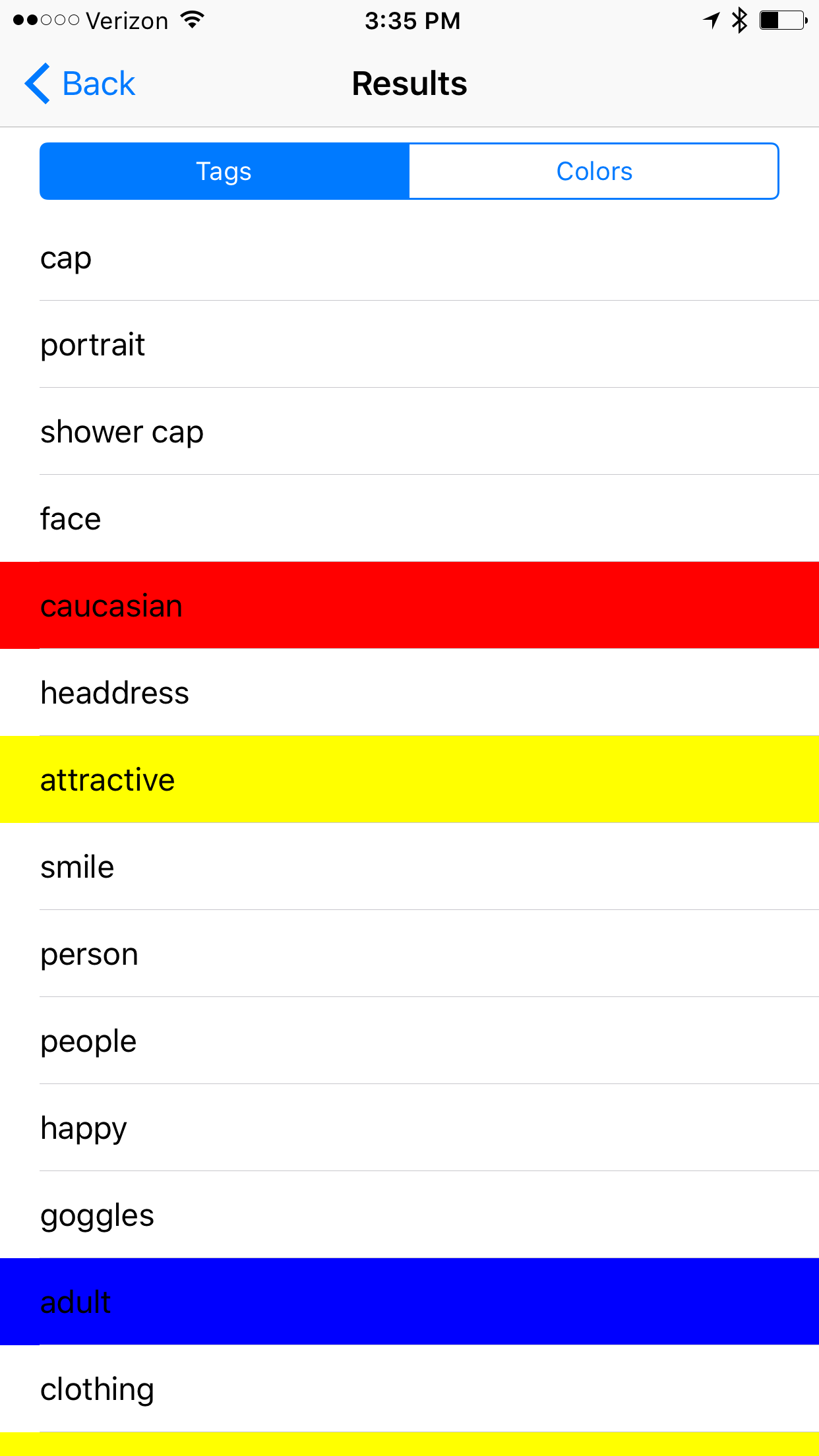

Example #1

Notable Results: detected the hat quite well, as well as gender; used the term 'worker'; detected caucasian and adult.

Does the algorithm have any correlations between the term 'worker' and gender or race?

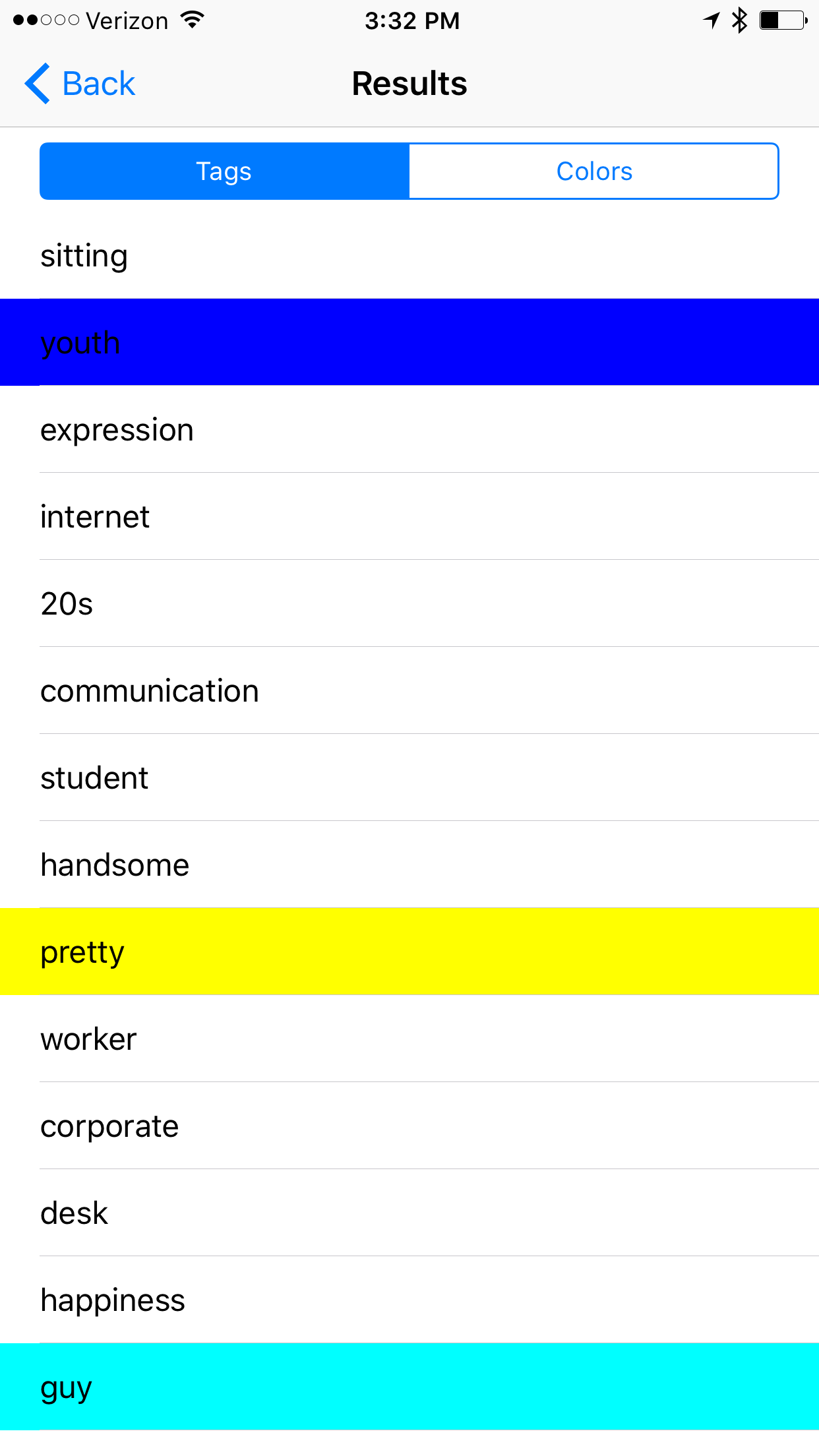

Example #2

Notable Results: high-confidence tags for adult, male, caucasian, business, work, computer, internet, 20s, corporate, and worker.

There is no computer or internet connection in the photo, yet many of the results are career-related. I'm not sure why this image evokes such responses. (Are Ray-Bans highly correlated with the tech sector?)

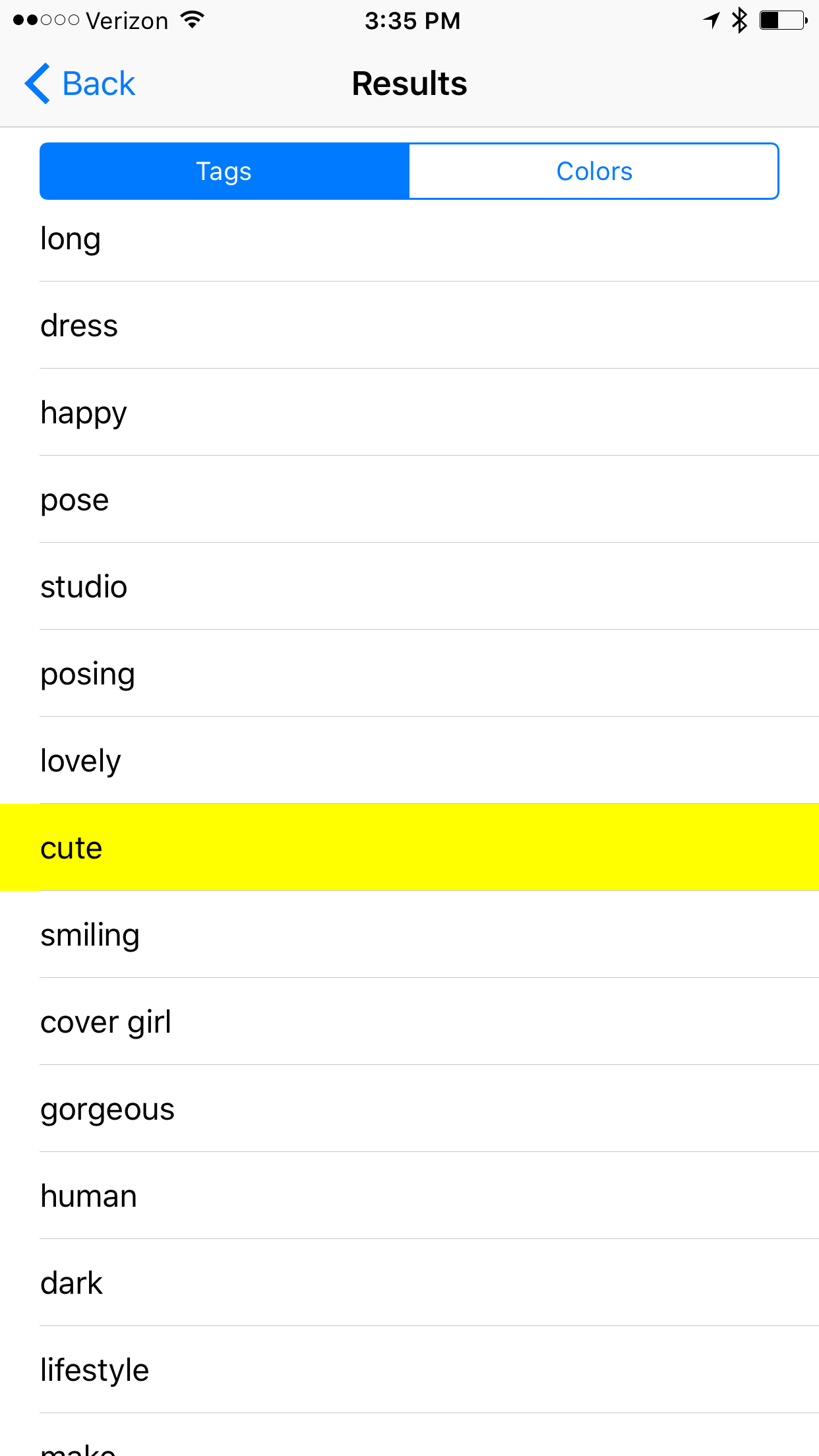

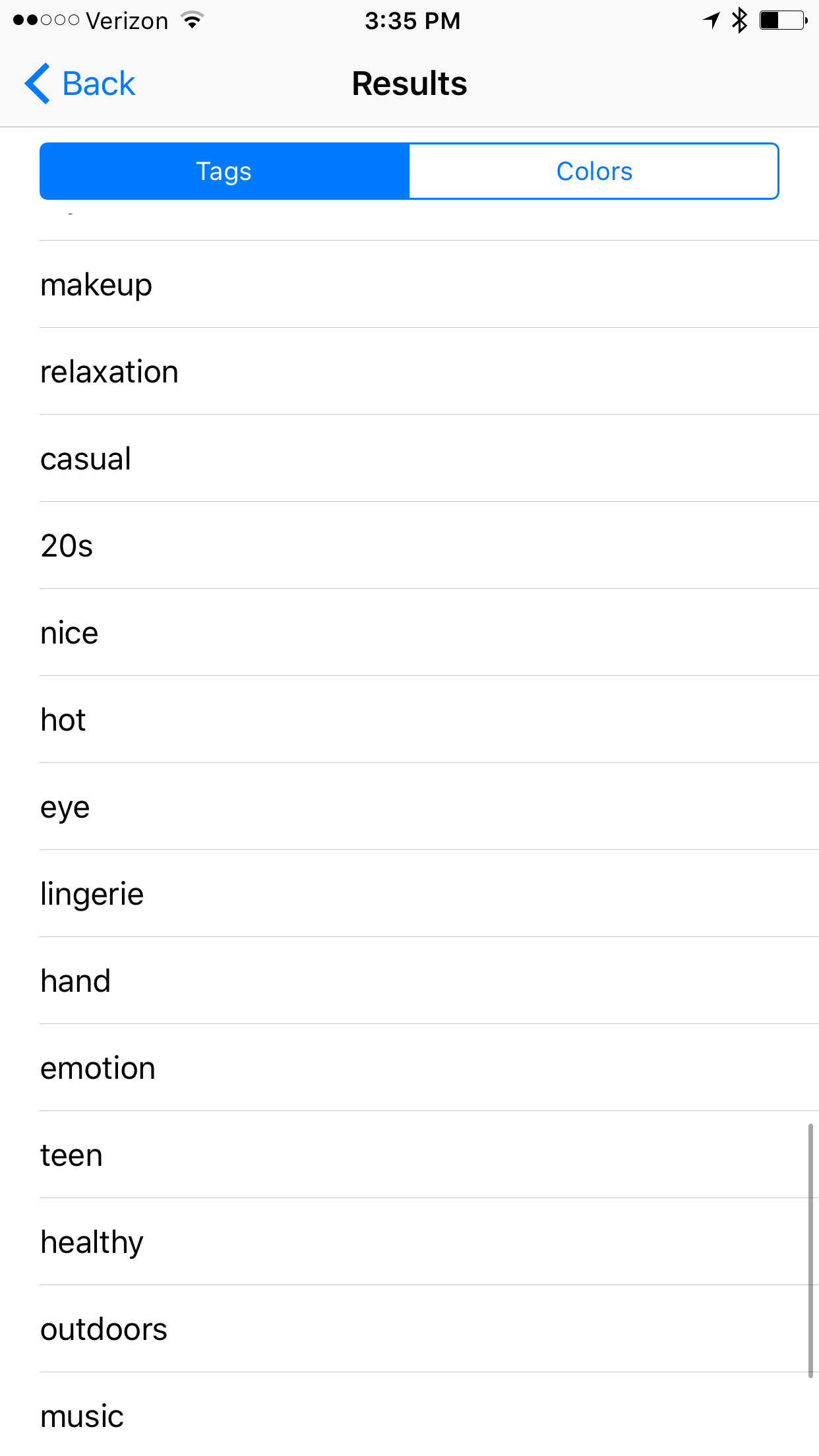

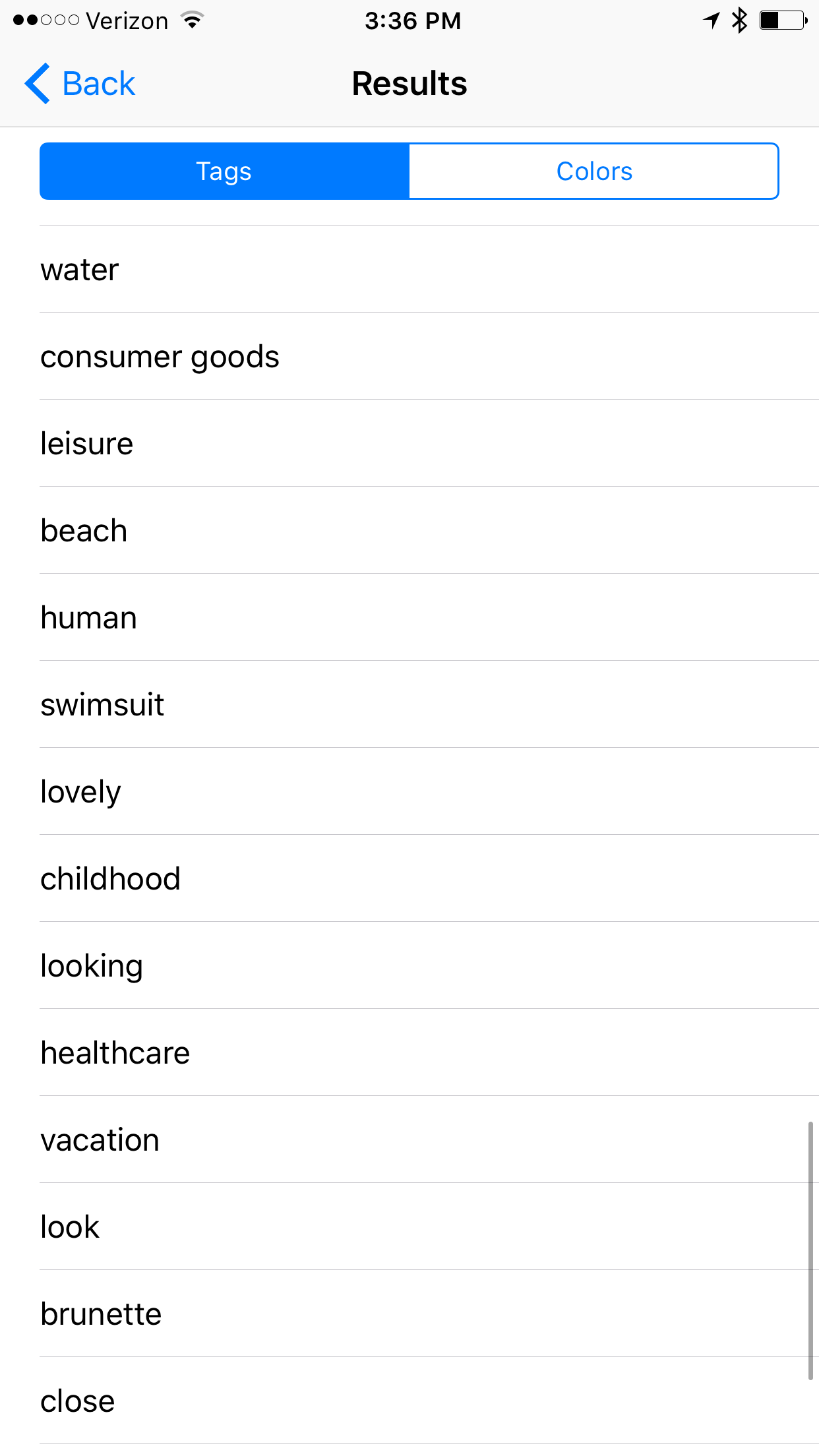

Example #3

Notable Results: attractive, pretty, glamour, sexy, sensuality, cute, gorgeous; age and race tags are also present.

The tags returned for images of women seem to have a very different focus. While images of men return terms that reflect interests or jobs, the results for images of women often use qualitative descriptors of their bodies. 'Model' was listed, but not the tag 'worker', which appears more generally in the results for images of men.

Example #4

Notable Results: caucasian, attractive, adult, sexy, lady, fashion.

Once again, more measures of attractiveness. Is there a correlation in this algorithm between gender or race and ideals of beauty?

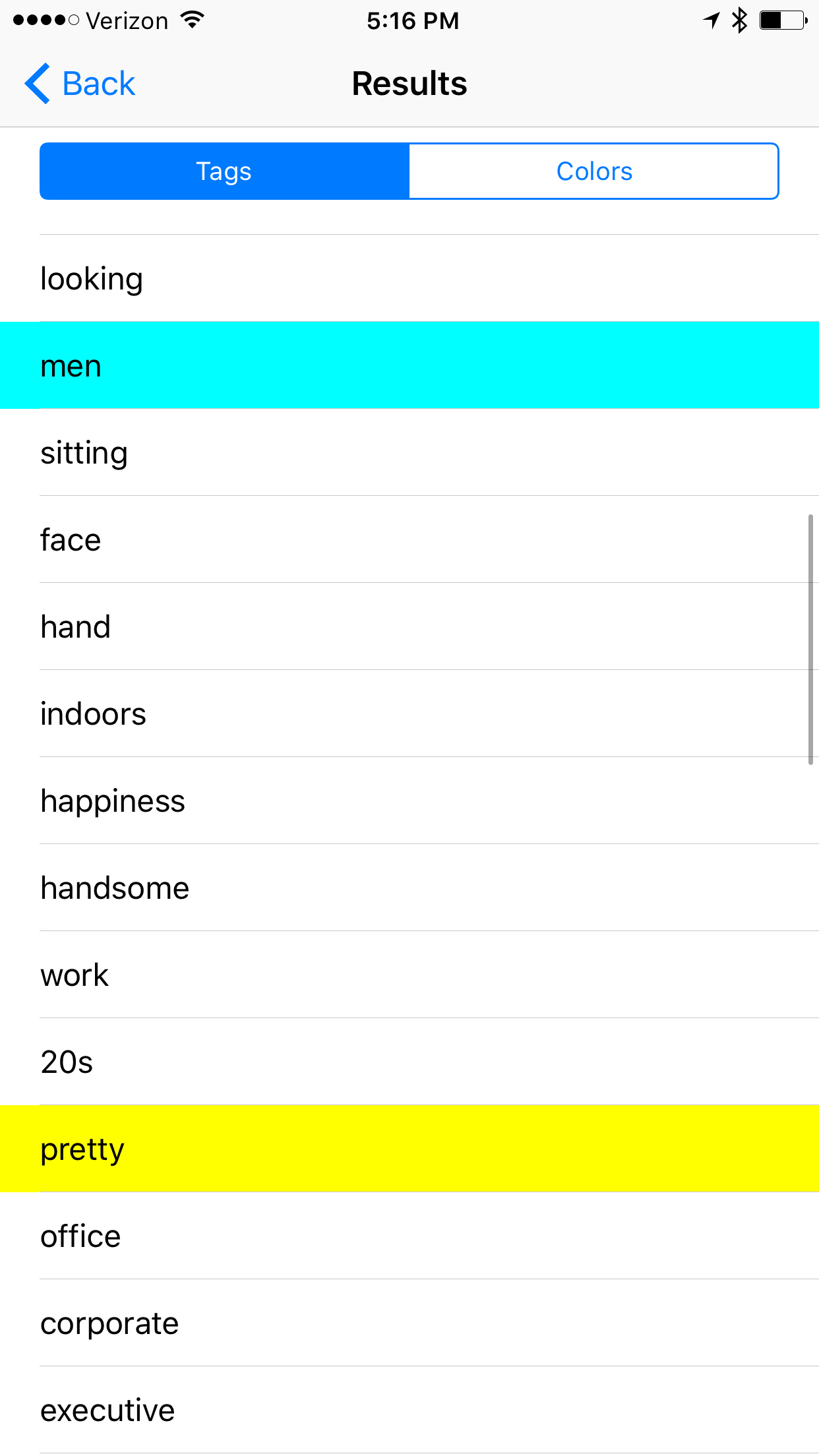

Example #5

Notable Results: high-confidence results for white, adult, male, attractive, business, handsome, pretty, corporate, and executive.

We see some qualitative appearance tags here, but dramatically fewer than for the images of women. We also see more business-related terms, in contrast with the career-related results for women.

Example: Dog Tax!

Notable Results: it did an amazing job recognizing that Ellie is, in fact, a Chesapeake Bay Retriever (and also, possibly, a hippo).

I will continue to submit more images and log their results (hopefully with more diversity in the next round). As technology progresses and becomes even more pervasive in our lives, it is important to review the ethical implications along the way. This sample size was too small to support any broader claims, but for me it raises questions we should ask ourselves about the relationship between technology and human constructs such as race and gender.
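One way to follow up on the 'worker' and 'attractive' questions as more results are logged is to count how often a given tag appears for each group of photos. This is a minimal sketch under stated assumptions: the log format, the group labels, and the `tag_rate_by_group` helper are all hypothetical, and the records are placeholders rather than real output from the service.

```python
from collections import defaultdict

# HYPOTHETICAL log format: one record per submitted photo, with a group label
# assigned by the person submitting it and the tags the service returned.
# These records are placeholders for illustration, NOT results from the service.
LOG = [
    {"group": "male", "tags": ["adult", "worker", "business"]},
    {"group": "male", "tags": ["adult", "corporate"]},
    {"group": "female", "tags": ["adult", "attractive", "fashion"]},
]


def tag_rate_by_group(log, tag):
    """Return, for each group, the fraction of its photos whose tags include `tag`."""
    totals = defaultdict(int)  # number of photos per group
    hits = defaultdict(int)    # number of photos per group that received `tag`
    for record in log:
        totals[record["group"]] += 1
        if tag in record["tags"]:
            hits[record["group"]] += 1
    return {group: hits[group] / totals[group] for group in totals}


if __name__ == "__main__":
    for tag in ("worker", "attractive"):
        print(tag, tag_rate_by_group(LOG, tag))
```

With samples this small, a difference in these per-group rates is a question to investigate rather than evidence of bias; the comparison only becomes meaningful with many more, and more diverse, photos.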

Do you have any concerns about the future of AI? Leave your comments below!

We will be adding future content at AIBias.com.